Nandini Roy Choudhury, writer

Brief news

- The family of Genesis Giovanni Mendoza-Martinez, who died in a 2023 Tesla crash, has sued Tesla for “fraudulent misrepresentation” regarding its Autopilot system, claiming it contributed to the accident.

- Tesla has had the lawsuit moved to federal court, arguing that the driver's negligence was the primary cause of the crash, while the Mendoza family alleges Tesla made misleading claims about Autopilot.

- The National Highway Traffic Safety Administration is investigating Tesla's Autopilot amid concerns over its marketing and safety, as rival companies advance in autonomous vehicle technology.

Detailed news

The family of a driver who was killed in a crash in 2023 has filed a lawsuit against Tesla, claiming that the company’s “fraudulent misrepresentation” of its Autopilot system was the cause of the victim’s death.

The driver, Genesis Giovanni Mendoza-Martinez, was pronounced dead at the scene of the crash in Walnut Creek, California, which involved a Tesla Model S. His brother Caleb, who was a passenger at the time, sustained serious injuries.

In October, the Mendoza family sued Tesla in state court in Contra Costa County. In recent days, however, Tesla had the case moved from state court to federal court in the Northern District of California. The Independent first reported the change of venue. In federal court, plaintiffs alleging fraud generally face a higher burden of proof.

The 2021 Model S crashed into a parked fire truck while the driver was using Autopilot, Tesla's partially automated driving system.

Mendoza's attorneys allege that Tesla and CEO Elon Musk have for years exaggerated or made misleading claims about Autopilot in order to "generate excitement about the company's vehicles and thereby improve its financial condition." They cite tweets, company blog posts, remarks on earnings calls, and press interviews.

In their response, Tesla's attorneys said the crash was caused by the driver's "own negligent acts and/or omissions," and that the driver's "reliance on any representation made by Tesla, if any, was not a substantial factor" in causing harm to either the driver or the passenger. They also assert that Tesla's cars and systems have a "reasonably safe design" that complies with both state and federal regulations.

Tesla did not respond to requests for comment. Brett Schreiber, the attorney representing the Mendoza family, declined to make his clients available for an interview.

Beyond this case, there are at least fifteen other active cases centered on similar allegations, involving Tesla crashes in which Autopilot or its Full Self-Driving (Supervised) system was in use shortly before a fatal or injury crash. Three of them have been moved to federal court. FSD is the premium version of Tesla's partially automated driving software. While Autopilot comes standard on all new Tesla vehicles, owners pay an upfront fee or a monthly subscription to use FSD.

The crash at the center of the Mendoza-Martinez case is also part of a broader investigation of Tesla Autopilot that the National Highway Traffic Safety Administration (NHTSA) launched in August 2021. During that investigation, Tesla made changes to its systems, including a number of over-the-air software updates.

The agency has since opened a second, ongoing investigation to determine whether Tesla's "recall remedy" for problems with how Autopilot behaves around stationary first-responder vehicles was effective.

NHTSA has also warned Tesla that the company's social media posts could lead drivers to believe its cars are robotaxis. Separately, the California Department of Motor Vehicles has sued Tesla, alleging that the company's claims about Autopilot and FSD amount to misleading advertising.

Tesla is currently rolling out a new version of FSD to customers. Over the weekend, Musk urged his more than 206.5 million followers on X to "Demonstrate Tesla self-driving to a friend tomorrow," adding, "It feels like magic."

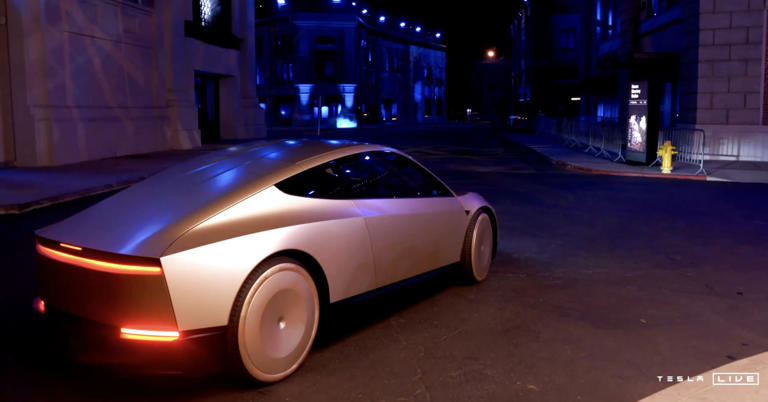

Since around 2014, Musk has been promising investors that Tesla's cars would soon be able to drive themselves, with no human driver required. Tesla has yet to produce a robotaxi, although it has shown a concept for an autonomous two-seater called the CyberCab.

Meanwhile, rivals including Alphabet-owned Waymo in the United States and WeRide and Pony.ai in China already operate commercial robotaxi fleets and services.

Source: CNBC News